Case study: Field trial of multi-layer slicing over disaggregated optical networks enabling end-to-end crowd-sourced video streaming

Optical networks face an increasing need to support new services with fast-response, real-time and on-demand requirements. Researchers at the University of Bristol are experimenting with Crowdsourced Live Video Streaming (CLVS) over the NDFF optical network testbed to meet the high-capacity and low-latency requirements of the large volume of traffic such services generate.

The next generation of networks aims to support new services with fast-response, real-time and on-demand requirements. Crowdsourced Live Video Streaming (CLVS) is an example of such a Network Service (NS): thousands of users attending an event stream video from their smartphones to a CLVS platform, where the content from all users is edited in real time into an aggregated video that can be broadcast to a large number of viewers. Our aim is to provide such a network service while meeting its stringent requirements.
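The capacity argument can be made concrete with a back-of-the-envelope estimate. The sketch below assumes a per-user uplink bitrate of 5 Mb/s and a few illustrative audience sizes (neither figure comes from the case study) and compares the resulting load with a single 100 Gb/s channel, the line rate of the testbed transponders. It ignores protocol overhead and traffic bursts, so it should be read as a rough lower bound on the required capacity.

```python
# Back-of-the-envelope uplink load for a CLVS event.
# ASSUMPTIONS (not from the case study): the per-user bitrate and the user
# counts are illustrative; the 100 Gb/s line rate matches the testbed.

LINE_RATE_GBPS = 100.0  # one coherent PM-QPSK channel, as in the testbed


def aggregate_uplink_gbps(num_users: int, per_user_mbps: float) -> float:
    """Total contributed video traffic in Gb/s (no protocol overhead)."""
    return num_users * per_user_mbps / 1000.0


def max_streams_per_channel(per_user_mbps: float,
                            line_rate_gbps: float = LINE_RATE_GBPS) -> int:
    """Number of streams of the given bitrate that fit in one channel."""
    return int(line_rate_gbps * 1000.0 // per_user_mbps)


if __name__ == "__main__":
    for users in (1_000, 5_000, 10_000):
        load = aggregate_uplink_gbps(users, per_user_mbps=5.0)
        print(f"{users:>6} users x 5 Mb/s -> {load:.1f} Gb/s")
    print("streams per 100 Gb/s channel:", max_streams_per_channel(5.0))
```

Under these assumptions even a 10,000-user event stays well within one 100 Gb/s channel; the harder constraints are latency and how quickly the slice can be set up.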

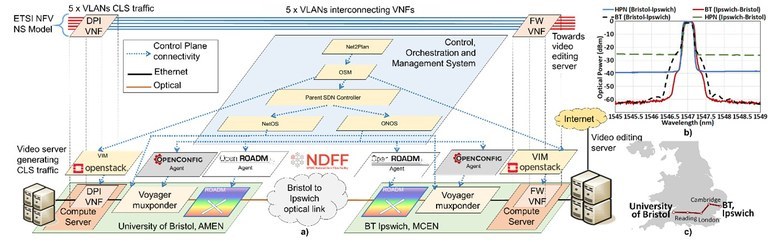

At the University of Bristol, the CLVS NS is demonstrated experimentally using Virtual Network Function (VNF) orchestration and a Control, Orchestration and Management (COM) system implemented over the NDFF disaggregated optical network testbed (Fig. 1). CLVS benefits from this infrastructure because the COM system can rapidly provision a slice on top of the end-to-end (E2E) compute and network resources, supporting the high-capacity and low-latency requirements of the large volume of traffic generated.

Fig. 1. a) Testbed for the CLVS application, b) optical spectrum of the Bristol-Ipswich link, c) UK optical connectivity: Bristol-Ipswich via NDFF.

In the data plane, SDN-enabled nodes combine compute servers, which host the VNFs, with multi-layer packet and optical networking capabilities. At each node, the compute server is connected to a multi-layer Voyager switch, which can switch VLAN-tagged Ethernet traffic from a client port towards the optical network via a DWDM coherent transponder. The Voyager switch is in turn connected to a ROADM at each node, and software agents control the Voyager switches and ROADMs to enable disaggregation in the optical network. The nodes are hosted at the HPN lab, University of Bristol, and at BT Research Labs in Ipswich. The two sites are interconnected via NDFF and the Cambridge-Ipswich link, with 530 km of fibre in total. The Voyager provides 100 Gb/s PM-QPSK modulated signals. The measured pre-FEC bit error rate (BER) was 1.33×10⁻³ for the Ipswich-Bristol link and 4.25×10⁻⁵ for the Bristol-Ipswich link.
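One way to read the two pre-FEC BER figures is to convert them to a Q-factor and compare them with an FEC threshold. The sketch below assumes Gaussian noise, so that BER = 0.5·erfc(Q/√2), and uses the commonly quoted 7 % overhead hard-decision FEC threshold of 3.8×10⁻³ purely as an assumption: the case study does not state which FEC the transponders use. The names `q_factor`, `q_factor_db` and `margin_db` are illustrative helpers, not part of any testbed software.

```python
import math
from statistics import NormalDist

# ASSUMPTION: 7 % overhead hard-decision FEC threshold, commonly quoted as
# 3.8e-3. The case study does not say which FEC the transponders use.
ASSUMED_FEC_THRESHOLD = 3.8e-3

# Pre-FEC BER values reported for the two directions of the 530 km link.
MEASURED_BER = {
    "Ipswich-Bristol": 1.33e-3,
    "Bristol-Ipswich": 4.25e-5,
}


def q_factor(ber: float) -> float:
    """Linear Q-factor from BER under a Gaussian-noise model.

    BER = 0.5 * erfc(Q / sqrt(2)) = 1 - Phi(Q), hence Q = -Phi^-1(BER),
    where Phi is the standard normal CDF.
    """
    if not 0.0 < ber < 0.5:
        raise ValueError("BER must lie in (0, 0.5)")
    return -NormalDist().inv_cdf(ber)


def q_factor_db(ber: float) -> float:
    """Q-factor in dB, using the usual 20*log10(Q) convention."""
    return 20.0 * math.log10(q_factor(ber))


def margin_db(ber: float, threshold: float = ASSUMED_FEC_THRESHOLD) -> float:
    """Q margin (dB) of a measured BER relative to the FEC threshold."""
    return q_factor_db(ber) - q_factor_db(threshold)


if __name__ == "__main__":
    for direction, ber in MEASURED_BER.items():
        print(f"{direction}: BER={ber:.2e}  Q={q_factor_db(ber):.2f} dB  "
              f"margin={margin_db(ber):.2f} dB")
```

With this assumed threshold both directions come out with positive margin, the Bristol-Ipswich direction by a few dB more; a different FEC would shift the threshold and therefore the margins.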

A server at HPN generates video streams emulating CLVS traffic on multiple VLANs; these traverse the VNFs and the metro network towards the video editing server at BT. The video editing server aggregates the received video streams and broadcasts the result to a live streaming platform (Twitch) over the Internet.

In the control plane, the COM system provisions a slice of the overall resources by mapping each VNF and virtual link onto deployed virtual machines and connectivity over the metro network, using a hierarchy of services. The average setup time achieved by the COM system is within 5 minutes, with the exact time depending on the number of links, nodes and VLANs. Compared with the 5G PPP KPI of 90 minutes for service creation time, this shows that the presented solution meets the specification.
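The mapping step can be illustrated with a toy model. The sketch below is hypothetical: node names, vCPU figures, VNF names and the first-fit placement policy are illustrative assumptions, not the METRO-HAUL COM system's algorithm, and the hierarchy of services is not modelled. It places each VNF on a compute node, then decides for each virtual link whether its VLAN stays on one server, is switched locally by the Voyager, or must be carried over an optical lightpath between sites. A final check compares a setup time against the 90-minute 5G PPP KPI.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL model: node names, vCPU figures, VNF names and the first-fit
# policy are illustrative and are not taken from the METRO-HAUL COM system.

KPI_SERVICE_CREATION_MIN = 90.0  # 5G PPP service-creation KPI


@dataclass
class ComputeNode:
    name: str
    site: str
    free_vcpus: int


@dataclass
class VNF:
    name: str
    vcpus: int


@dataclass
class VirtualLink:
    src: str   # source VNF name
    dst: str   # destination VNF name
    vlan: int  # VLAN carrying this link through the Voyager switches


@dataclass
class SlicePlan:
    placement: dict[str, str] = field(default_factory=dict)  # VNF -> node
    # (vlan, layer) where layer is "local", "packet" or "optical"
    connections: list[tuple[int, str]] = field(default_factory=list)


def map_slice(vnfs: list[VNF], links: list[VirtualLink],
              nodes: list[ComputeNode]) -> SlicePlan:
    """Place VNFs first-fit on compute nodes, then pick a layer per link.

    Note: decrements free_vcpus on the given nodes (reserves capacity).
    """
    plan = SlicePlan()
    site_of = {n.name: n.site for n in nodes}
    for vnf in vnfs:
        node = next((n for n in nodes if n.free_vcpus >= vnf.vcpus), None)
        if node is None:
            raise RuntimeError(f"no compute capacity left for {vnf.name}")
        node.free_vcpus -= vnf.vcpus
        plan.placement[vnf.name] = node.name
    for link in links:
        a, b = plan.placement[link.src], plan.placement[link.dst]
        if a == b:
            layer = "local"    # both VNFs on the same server
        elif site_of[a] == site_of[b]:
            layer = "packet"   # same site: VLAN switched by the Voyager
        else:
            layer = "optical"  # different sites: VLAN carried on a lightpath
        plan.connections.append((link.vlan, layer))
    return plan


def meets_kpi(setup_minutes: float) -> bool:
    """True if a measured setup time is within the 90-minute KPI."""
    return setup_minutes <= KPI_SERVICE_CREATION_MIN


if __name__ == "__main__":
    nodes = [ComputeNode("hpn-server", "Bristol", 16),
             ComputeNode("bt-server", "Ipswich", 16)]
    vnfs = [VNF("ingest", 8), VNF("transcoder", 8), VNF("editor", 12)]
    links = [VirtualLink("ingest", "transcoder", 101),
             VirtualLink("transcoder", "editor", 102)]
    plan = map_slice(vnfs, links, nodes)
    print(plan.placement)
    print(plan.connections)
    print("5-minute setup meets the 90-minute KPI:", meets_kpi(5.0))
```

In this toy run the first two VNFs fill the Bristol server, so the editor lands in Ipswich and VLAN 102 has to cross the optical layer. A real orchestrator would also weigh latency, bandwidth and spectrum availability when choosing placements.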

This work has been funded by the European Commission [METRO-HAUL: G.A. 761727].

For further information, contact Prof. Dimitra Simeonidou, ndff@ee.ucl.ac.uk

Published: 01 February 2021